Is there madness in our method?

Speech

Chair Michael Brennan addressed the 33rd Conference of the History of Economic Thought Society.

The emotion of art is impersonal. And the poet cannot reach this impersonality without surrendering himself wholly to the work to be done. And he is not likely to know what is to be done unless he lives in what is not merely the present, but the present moment of the past; unless he is conscious, not of what is dead, but of what is already living. (TS Eliot)

Why should we bother with the history of economic thought?

It’s not an idle question. If we regard economics as a pure ‘objective’ science, with consistent progress and each discovery building on the last, then we shouldn’t really care how we came to know what we know – it would be enough just to know it.

Do we read dead economists because all their theories are equally valid for their time and place? Do we think of economics as an evolving literary canon, with each new work interpreted in light of the old and the old re-interpreted in light of the new, as TS Eliot suggested?

Maybe, but this can’t be the whole story. After all, it’s possible to be wrong in economics. A purely relativist account doesn’t cut it.

Economics isn’t physics, but it does progress over time. There are genuine discoveries and breakthroughs, but they tend to come in an uneven, non-linear and context-specific way. Popper contended that all scientific ‘truths’ are conditional; they seem particularly so in economics. The profession is more loosely bound around its shared corpus of knowledge and its understanding of technique than the ‘hard’ sciences of physics or chemistry.

But would any of us deny that economics has made advances?

Think of Smith’s discussion of the division of labour; Ricardo’s theory of land rent, later generalised by the marginalists; the marginal revolution itself, a remarkable case of simultaneous ‘discovery’ by economists as different as Carl Menger, Leon Walras and WS Jevons; the theory of public goods; game theory more generally. Don’t all of these examples represent in some way a discovery or advance, an improvement in our understanding of economic phenomena in the real world?

But this progress is jagged. There can be two steps forward and one step back; we embrace the new but forget something of the old. We get carried along by a fresh intellectual current and we can fall for the fallacy that there is but one true way to ‘do economics’, only later realising what we have inadvertently left behind.

So economics, perhaps more than other sciences, has to be methodologically self-aware, and knowing your history helps.

In the face of any new wave, it pays to ask not just ‘where will this get us?’ but also ‘where could this take us off course?’. What is it that we risk forgetting in all the excitement?

To do that you need some vague sense of true north. In what follows, I merely offer my own perspective. I am not a scholar, but a policy practitioner. Moreover, these views are just mine: I cannot talk on behalf of the Productivity Commission, which is full of smart people who (one would hope) would disagree with at least part of my account.

I would emphasise three things about economics, traits that, put together, are central to the discipline and which we would not want to lose.

First, it is about people. Economics (as per one treatise) is a study of human action. Not microbes or molecules; not billiard balls or falling objects; and not heroes or saints. Just people – who act purposively, with the usual mix of strengths and frailties, cognitive and moral.

Second, it is a social science. People don’t just act – they interact. They tend to truck, barter and exchange, as Smith put it. One person’s actions shape another’s incentives and vice versa. Economics highlights the way individual actions aggregate into patterns, which in turn shape and re-shape the very context for individual action in the manner of a feedback loop. This is a trait shared by traditions as diverse as Walrasian general equilibrium, Hayek’s Use of Knowledge in Society and Keynesian insights into macro adjustments in the face of uncoordinated expectations. Economics has an account of equilibrium and, importantly, of disequilibrium.

Third, it aspires to generality. Economics aims to find regularities; to better understand the world, not just interpret an individual event.

This is my own amateur typology – by no means perfect. My contention is that economics risks going off course when it loses sight of any or all of these things.

I will illustrate this by talking about two intellectual currents: the rise and dominance of neoclassical economics, with its tendency to mathematical formalism, and the ongoing empirical revolution, with its focus on experiments and quasi-experimental econometric techniques.

Both have been positive developments. I think the benefits of neoclassicism outweighed the costs, and that will probably be true of the empirical turn. But there are costs, and there are risks if we don’t remain humble about the inevitable limitations of each intellectual wave.

The Neoclassical method

Neoclassical economics really grew out of the marginal revolution of the 1870s but arguably achieved its full dominance, including the mathematical formalism, after the Second World War. It had rigour and epistemic ambition.

In terms of my typology, it nailed the generality component: aiming for widely applicable insights (mainly theoretical) to the point of economics imperialism – uniting law, politics and the family under the economist’s analytic gaze.

It was also conscious of the social component. The focus on equilibrium formalised the process I mentioned earlier: how price taking agents, through their combined and unintended actions, generate market outcomes which in turn set the prices shaping individual choice.

But it arguably lost sight of the human. I don’t mean that neoclassical economics was uncaring. Quite the contrary: it brought welfare (i.e. well-being) considerations into the foreground. Once Menger and co. had ‘discovered’ marginal utility, it allowed the Paretos, Pigous and Samuelsons to explore the welfare implications of markets, market failures and corrective policies.

Where neoclassicism lost the human was in the specifics of the model itself. The people (or representative agents) in the model are generally assumed to solve a constrained optimisation – maximising utility subject to a budget constraint, or profits according to a production function and factor prices. They generally only change behaviour in the face of an externally generated ‘shock’.

This has been central to the neoclassical approach. The models were neat, and powerful in generating predictions based on their assumptions. But one cost was that the agents within them came to resemble automatons more than people.

They responded to stimuli rather than actively making choices. Their ‘actions’ were not really actions at all, dictated as they were by the first order conditions – effectively pre-determined by the parameters and functional form of the model.

Agents (usually) had perfect information, so there was no discovery, no novelty, no innovation. In fact, in the competitive equilibrium there was no competition as such, not in the active, bustling sense we would recognise from the real economy. Attempts to add stochastic elements – say error terms with a specified distribution, or a stock of knowledge based on new ideas that arrive according to a random process (of given parameters) – don’t fundamentally change this reality.

Does this really matter? After all, the theory is just allegory, a stylised ideal against which to better understand the world as it is, including to isolate where the real economy departs from said ideal.

The answer is that it matters when the allegory starts to obscure as much as it reveals. The theory largely left out the frictions, but the frictions (and human response to them) turn out to be among the most interesting and important things going on in a modern economy. The theory also struggled with the brute economic fact – plain for all to see – that economic change, growth and progress are primarily driven from within the system rather than from exogenous shocks.

Pyka and Saviotti described this phenomenon in connection with neoclassical growth models:

There is no explicit recognition of changes in the ways economic activities are organised, for example the rise of the factory system, and later the modern corporation and mass marketing or the stock market and other modern financial institutions, the rise and decline of labour unions, and the continuing advance of science, or the changing roles of government as factors influencing the growth process …

I reflect on Tim Harford’s fascinating book, The 50 Inventions that Shaped the Modern Economy, in which he tells the story of innovations ranging from barbed wire to double entry book-keeping, and the people and struggles that brought them about.

I wonder what I would have made of it, as a neo-classically trained economics graduate in the mid-1990s. I would have found it fascinating, no doubt. I presume I would have found it economically relevant, but perhaps not the main game – not the central subject matter of economics.

If pressed, I might have noted that all these innovations were effectively exogenous forces: facts about the external world that an economist would take as given. A series of external forces that impacted on the economy, with implications for key economic variables like relative prices, factor returns and resource allocation.

Perhaps these innovations could all be put into the bucket labelled ‘A’ – the exogenous technology parameter in a neoclassical growth model. Perhaps they could be endogenised via an equation linking knowledge to research effort.

But that doesn’t really solve the problem. It might improve my intuitive understanding of the process somewhat, but the logic is still essentially circular. If I convinced myself that I had said something important about the drivers and process of technological advance, I would be very much mistaken.

The English author GK Chesterton (certainly no economist) drew an apt connection between the determinist and the madman:

As an explanation of the world, materialism has a sort of insane simplicity. It has just the quality of the madman’s argument; we have at once the sense of it covering everything and the sense of it leaving everything out. Contemplate some able and sincere materialist, as, for instance, Mr. McCabe, and you will have exactly this unique sensation. He understands everything, and everything does not seem worth understanding. His cosmos may be complete in every rivet and cog-wheel, but still his cosmos is smaller than our world.

Arguably the weakness in the neoclassical frame stems in part from its strict determinism – its tendency to explain economic phenomena as the pre-determined and inevitable result of the model’s core assumptions, occasionally shocked from the outside. It explains everything – or something very completely – and yet somehow leaves so much out.

A symptom of the mathematical formalism of the neoclassical approach is that it creates a strict duality between factors that are exogenous (unexplained and determined outside the model) and those which are endogenous (internal but pre-determined, at least within given parameters).

To draw this out, note the stark contrast with economic frameworks that sit away from the neoclassical tradition, such as Joseph Schumpeter’s treatment of the role of the entrepreneur in economic progress. In part two of the Theory of Economic Development, Schumpeter starts to move the discussion on from the circular flow – his steady state straw man, which is ripe for disruption.

Here are two quotes, the first describing his process of economic development:

It is spontaneous and discontinuous change in the channels of the flow, disturbance of the equilibrium, which forever alters and displaces the equilibrium state previously existing.

Which sounds a lot like an exogenous shock. Until you read on to find:

By development therefore, we shall understand only such changes in economic life as are not forced upon it from without but arise by its own initiative, from within. [emphasis added].

So, Schumpeter’s entrepreneur, the prime mover of economic development, is a disrupter who brings about discontinuity, who blows up the pre-existing order. And yet Schumpeter is adamant that this happens from within the system, within what we can loosely call his ‘model’. Endogenous but undetermined.

I confess when I first read this, I thought it a contradiction. But that really just reflects a narrow view of what constitutes an economic ‘model’.

Geoffrey Hodgson has pointed out that innovation relies on acts of individual creativity and choice. As he put it: “Genuine creativity, real choices and willed changes of purpose mean that human action must contain an element of indeterminacy in the sense of an uncaused cause”.

This is not to say that modelling based on general equilibrium (and optimising agents) has no place. Used well, it can support intuition in ways that are not obvious to the naked eye. We have used it at the PC to better understand the potential impact of external shocks or large-scale policy change. We are using it as part of our current productivity review.

But it has limits, most notably in describing the spontaneous change that comes from within the economic system itself, which as it turns out, is where much of the action is.

Arguably economics has made its greatest strides when preserving a space between the exogenous and (my term) the strictly endogenous or model-determined: an in-between category of free action within the ‘model’ (broadly conceived).

That is, an analysis of genuinely ‘choosing’ agents, whose actions are not predetermined by parameter values and functional forms but who retain the basic tendency to purposive (or weakly rational) behaviour in their interactions with others (both competitive and cooperative).

This ‘in between’ is a region where agents can roam, discover, make and change plans, trade, make promises, renege, show faith, agree on rules or gradually shape norms. One where economists can still seek findings that are generalisable in a useful way, even with some quantification, if not mathematical precision. And ideally where economists retain the ability to say something about welfare implications.

I think of economists as diverse as Hayek, Coase and James Buchanan on the one hand, and Joan Robinson on the other; or Richard Nelson and Sidney Winter on growth; or (noting my earlier Schumpeter reference) Israel Kirzner’s description of entrepreneurship. I think also of Armen Alchian’s famous piece on uncertainty, evolution and economic theory.

All of these contributions (and they are a pretty random selection) share something of that vision of purposive, rather than parameter-driven, behaviour and change from within the system or model, neither exogenous nor pre-determined.

The point is that formalism eliminates that in-between. It shrinks the space. And we lose some important understanding as a result, whatever benefits we might also gain.

The Empirical Revolution

So what should we make of the next great intellectual current, namely the empirical turn in modern economics? It is 40 years since Ed Leamer talked about ‘taking the con out of econometrics’.

In 2021, Joshua Angrist, David Card and Guido Imbens won the Nobel Prize for their work on experimental and quasi-experimental approaches in applied microeconomics. So a lot has changed.

Angrist describes it as the Credibility Revolution, driven by new data, better econometric technique, and a renewed focus on thoughtful research design.

Any observer of the profession can see the influence and impact of this movement, particularly on young graduates. The focus is squarely on causal inference, that is, identifying causation – usually after the event. Having observed effect B, can we say with confidence that it was caused by action/policy/event A? And how big was the effect on average, relative to a (real or synthetic) control group? That is, what is the average treatment effect (ATE)?

Where the stylised neoclassicist might assume a parameter which affects behaviour in the model, the modern empiricist starts with observing human behaviour to try to work out the sign and size of the parameter.

I note in passing that both approaches imply a slightly mechanistic world view; a presumption that the central task of economics is to generate knowledge about underlying causal forces. A sort of billiard ball view of economics.

It’s a well-known trope that correlation is not causation. Finding causal effects in data – to discern the actual impact of a policy or event – is hard because the counterfactual is usually unobservable. Once a ‘treatment’ is administered, we cannot directly observe what would have happened without it.

But randomised trials and quasi-experimental methods (like instrumental variables, regression discontinuity and difference-in-differences approaches) can give us some insight. When we randomise (or find instances of naturally occurring randomisation), we can have more confidence that the counterfactual is proxied by the experience of the ‘control’ group.
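A minimal sketch of why randomisation helps, in potential-outcomes notation that is an assumption here rather than something taken from the speech: write Y_i(1) and Y_i(0) for person i's outcome with and without the treatment, and D_i = 1 if they were treated. Only one of the two outcomes is ever observed for any one person.

```latex
\begin{align*}
\text{ATE} &= E[\,Y_i(1) - Y_i(0)\,] \\[4pt]
E[\,Y_i \mid D_i = 1\,] - E[\,Y_i \mid D_i = 0\,]
  &= \underbrace{E[\,Y_i(1) - Y_i(0) \mid D_i = 1\,]}_{\text{effect on the treated}}
   + \underbrace{E[\,Y_i(0) \mid D_i = 1\,] - E[\,Y_i(0) \mid D_i = 0\,]}_{\text{selection bias}}
\end{align*}
```

If treatment is assigned at random, D_i is independent of both potential outcomes, so the selection-bias term is zero and the effect on the treated equals the ATE. The simple difference in group means then recovers the average effect. Without randomisation, neither simplification is guaranteed.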

Of course, in the real world, with its many potentially confounding variables, there is still a great deal of complexity in understanding the conditions under which this really holds true.

One of the reasons economists are well suited to this work is that our theoretical framework helps to locate the potential threats to validity – the instances of reverse causality, or omitted variables that might bias a particular specification.

So this approach seems a good fit for economists. It gives us stronger empirical results and it has arguably re-focused the profession on real world issues and policy relevance – not least in program evaluation. This all seems to the good. What’s the downside?

Back to my typology, one issue is that of generality. Experimental and quasi-experimental research designs can be quite context specific. But policymakers seek transferable, generalisable results – a program that can be scaled up or an overseas policy that can be replicated.

The average treatment effect from a given sample does not always generalise to another context. Nor does it tell you what will happen in the case of a particular individual.

As Angus Deaton and Nancy Cartwright put it, “The literature discussing RCTs has paid more attention to obtaining results than to considering what can justifiably be done with them”.

The risk of over-selling the applicability of results is real, not least when economists get more focused on econometric technique than policy implications. From time to time in policy discourse, one hears the claim that we ‘know’ some general truth as a result of randomised trials or other empirical work. In fact, what we ‘know’ is probably more specific to time, place and sample.

Advocates will acknowledge this but say that the answer is more and better empirical work – more piecemeal results that will gradually build a knowledge base, brick by brick.

It is an open question what sort of knowledge base we will build up by that method over the long term. Plausibly, we will get better estimates of some structural parameters economists use for other modelling purposes, such as a price elasticity for a type of good.

And there are times when an average treatment effect tells us all that we want to know, as in a program evaluation. Did the program produce the desired result in general? If yes, we should continue.

Will we also gain transferable, generalised understanding about complex social policy issues in the real world via individual experiments and quasi-experiments? I think the answer is a very tentative yes. The question is how broad and how firm that knowledge base will be. As always, economists will have to show humility about what we truly ‘know’.

But there is a further issue, which goes to the social/interactive element of my earlier typology.

Usually in economics, an empirical result about A leading to B is not an end in itself. It is just the start. We really want to know what the result means for subsequent behaviour and interaction, and welfare. The Nash equilibrium, say, and whether it looks like a social optimum. That is the distinctly economic bit.

As James Heckman said of the narrow ‘program evaluation’ approach:

… the economic questions answered and policy relevance of the treatment effects featured in the program evaluation approach are often very unclear.

He goes on to say of these models that:

… they do not allow for interpersonal interactions inside and outside of markets in determining outcomes that are at the heart of game theory, general equilibrium theory and models of social interaction and contagion.

Deaton and Cartwright give a hypothetical example of an experimental result in agriculture that improves crop yields. As they indicate, even if the experiment has internal and external validity, what if demand in the relevant market is price inelastic? Then the widespread roll-out of the treatment could reduce prices and revenues and possibly leave farmers worse off!
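The arithmetic behind that hypothetical can be sketched in a few lines. Everything numerical here is an assumption chosen for illustration, not a figure from Deaton and Cartwright: a constant-elasticity demand curve, a price elasticity of 0.5, and a 10 per cent rise in output once the treatment is rolled out across the whole market.

```python
def price_and_revenue(quantity, elasticity, scale=1.0):
    """Invert constant-elasticity demand Q = scale * P**(-elasticity) for P.

    Returns the market-clearing price and total farm revenue (P * Q).
    """
    price = (quantity / scale) ** (-1.0 / elasticity)
    return price, price * quantity


ELASTICITY = 0.5   # assumed: demand is price inelastic (elasticity below 1)
YIELD_GAIN = 0.10  # assumed: the treatment lifts market output by 10 per cent

# Baseline output is normalised to 1, so baseline price and revenue are 1 too.
p0, r0 = price_and_revenue(1.0, ELASTICITY)
p1, r1 = price_and_revenue(1.0 + YIELD_GAIN, ELASTICITY)

price_change = p1 / p0 - 1.0
revenue_change = r1 / r0 - 1.0

print(f"price change:   {price_change:+.1%}")
print(f"revenue change: {revenue_change:+.1%}")
```

Under these assumptions the price falls by more than output rises, so total revenue falls by about 9 per cent even though every farm grows more. An elasticity above 1 would reverse the sign, which is why the experimental result alone cannot settle the welfare question.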

One might add that the interesting economic question then becomes: what might farmers do about this? And would consumers be happy about the collective bargain farmers might make to overcome their prisoner’s dilemma?

Deaton and Cartwright conclude:

… without the structure that allows us to place RCT results in context, or to understand the mechanisms behind those results, not only can we not transport whether ‘it works’ elsewhere, but we cannot do one of the standard tasks of economics, which is to say whether the intervention is actually welfare improving. Without knowing why things happen and why people do things, we run the risk of worthless casual (fairy story) causal theorising and have given up on one of the central tasks of economics and other social sciences.

Again, to be clear, this focus on data and empirical rigour is to be welcomed and represents a big opportunity. We just have to keep reminding ourselves that it’s not everything. It is a complement to a strong conceptual framework and qualitative understanding of real-world context.

A final aside: I know it is just a matter of vocabulary, but I confess to some squeamishness about the language of ‘experiment’, ‘treatment’ and ‘control’ in social policy settings (that human thing again). The knowledge (supposing it to be knowledge) that cause A led to effect B seems relevant to the omnipotent central planner, with the world spread out before them like a chess board. But that seems more like engineering than economics.

To me, the question for economists is not how the planner can fix the world, but how everyday people go about trying to improve theirs. And what are the policies, settings and institutional rules that help or hinder them in the process?

A policy case study: working from home

As noted above, my perspective on method and history of economic thought is that of a policy practitioner, not a scholar. So I think about it in the context of policy issues. Take one issue of contemporary interest: the rise of working from home during and after the COVID pandemic.

What should we make of it? What, if anything, is the role for government policy? And how might economists think about it?

I use this example purely to knock down some straw men – that is, to demonstrate the weakness of an exclusive focus on any single approach. If the issue had arisen 30 years ago and was the subject of my honours thesis, I would have done the neoclassical thing: sketched out a basic constrained maximisation model.

In fact, at the PC we did just that (as part of our overall approach): representative firm, price taking and profit maximising. Utility maximising household with a labour-leisure choice and a budget constraint.

That sort of model does demonstrate some insights. For example, if there are now two types of labour (from home and the workplace) but only one wage, then the employer will tend to set the amount of work from home at the point where the marginal product of labour in each setting is equalised.

That is highly unlikely to be the same point at which the household’s marginal disutilities of labour (of the two types) are equalised.
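A stylised version of the two conditions, as a sketch only. The notation is assumed for illustration and is not the Commission's actual model: L_h and L_o are hours worked from home and in the office, F is the firm's production function, p the output price, w the single wage, C consumption, and U the household's utility, which falls as either kind of labour rises.

```latex
\begin{align*}
\text{Firm:} \quad
  & \max_{L_h,\,L_o} \; p\,F(L_h, L_o) - w\,(L_h + L_o)
  && \Longrightarrow \quad p\,\frac{\partial F}{\partial L_h} = p\,\frac{\partial F}{\partial L_o} = w \\[6pt]
\text{Household:} \quad
  & \max_{L_h,\,L_o} \; U(C, L_h, L_o) \quad \text{s.t.} \quad C = w\,(L_h + L_o)
  && \Longrightarrow \quad -\frac{\partial U / \partial L_h}{\partial U / \partial C}
     = -\frac{\partial U / \partial L_o}{\partial U / \partial C} = w
\end{align*}
```

Each side has its own preferred split between home and office. The firm's split depends only on F, the household's only on U, and with a single wage there is no price that can move to reconcile them. Two separate wages, one for each type of labour, would give the model that extra margin of adjustment.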

So at the margin, there could be a tension. Probably the boss wants less work from home and the worker wants more. If wages could fully adjust, the story might be different. This is a useful insight, but as Chesterton might say: it explains something, but what a lot it leaves out!

The firm and household are assumed to have perfect knowledge and well understood preferences. But this is hardly realistic in a case like the sudden uptake of remote work, where we are all learning as we go.

Also, the firm and household are representative agents, but this too misses the point – it is the heterogeneity of firms and workers that is of interest. Some are better suited to remote work than others. Some want it more. Some not at all. Some workers will switch jobs, some firms will find workers with a more compatible set of preferences. Many will invest in ways to make remote work ‘work’ for them.

These effects, the switching and the learning, will alter the static effect of my model, no doubt quite profoundly.

What would the honours student of today do? Perhaps run an RCT, or, to showcase some econometric technique, find a quasi-experimental method like a regression discontinuity or instrumental variable to mimic a degree of randomisation.

The outcome will be an average treatment effect. It might find a causal effect: people who started to work from home were, on average and compared to a counterfactual, more (or less) productive, happy, healthy and so on.

Again, this might tell us something useful but also leaves so much out.

First, for the firm, the randomised trial is probably of limited value. Randomisation is helpful when we cannot observe the counterfactual to the treatment. It gives us a proxy in the form of a control group (or the matching sample).

But here the firm has a pretty good sense of the counterfactual, at least in respect of productivity. They know (to a first approximation) how productive people are in the office. They can make an assessment about productivity at home. Moreover, they can do this at the level of the individual, not just the average.

So the average treatment effect on productivity would surely be an extreme lower bound for the firm: they get to choose who works from home, when and how much.

For the policymaker, there is a risk of being misled by a result that furnishes an average treatment effect from a randomised work-from-home trial. Perhaps the trial showed that productivity fell or mental health worsened. If the policymaker then observes that working from home is on the rise in aggregate, they might extrapolate from the trial results and fear adverse societal effects.

But, of course, the average treatment effect would be unreliable. In the real world, the rise in working from home is by people who are not randomised. They are selected. They are selected by an economic system: a constellation of employers, managers, team leaders and workers themselves who are choosing who works from home, which days and under what circumstances, and choosing largely on the basis of their fitness for remote work.
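A small simulation can make the gap between the two numbers concrete. It is a sketch under loudly stated assumptions: the population is hypothetical, individual effects of working from home are drawn from a normal distribution with a mean of -1 and a standard deviation of 3 (invented numbers), and the 'selection system' is reduced to the crudest possible rule, namely that only workers who gain from remote work end up doing it.

```python
import random
import statistics

random.seed(0)  # fixed seed so the run is repeatable

# Hypothetical workers: (output in the office, individual effect of working
# from home). All distribution parameters are assumptions for illustration.
N = 100_000
workers = [(random.gauss(100.0, 10.0), random.gauss(-1.0, 3.0)) for _ in range(N)]

# Randomised trial: a coin flip decides who works from home, regardless of
# how well suited each worker is to it.
treated, control = [], []
for office_output, home_effect in workers:
    if random.random() < 0.5:
        treated.append(office_output + home_effect)
    else:
        control.append(office_output)
ate_estimate = statistics.mean(treated) - statistics.mean(control)

# Real-world uptake: assume firms and workers sort, so that only those whose
# own effect is positive actually work from home.
selected_effects = [effect for _, effect in workers if effect > 0]
effect_among_selected = statistics.mean(selected_effects)
share_selected = len(selected_effects) / N

print(f"trial estimate of the average effect: {ate_estimate:+.2f}")
print(f"average effect among those selected:  {effect_among_selected:+.2f}")
print(f"share of workers selected:            {share_selected:.0%}")
```

With these assumed numbers the trial estimate lands close to the population average of -1, while the average effect among those who actually take up remote work is positive. Real selection is far messier than a sign test on a known effect, which is the reason to study how the selection system works rather than to extrapolate from the trial average.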

The key is to understand that selection system and how it selects – is it efficient? Is it fair? What are the rules of the game?

I emphasise that there is nothing wrong with the RCT, just its naïve interpretation.

To fully think through the implications of remote work, one would want to do so from a variety of angles: the labour-leisure model might be one, the experimental research design is another. Then you might want to go back and read Ronald Coase or Oliver Williamson on the firm. Or Nelson and Winter on growth and technical change. Or Joel Mokyr on the rise of the factory system.

As it happens, there is a great emerging literature on working from home, such as the work of Bloom, Davis and Barrero.

What characterises that work is its pluralism: gathering empirical evidence from surveys, new patents on remote work technologies, and, yes, randomised trials, alongside a theoretical framework to support intuition and prediction. Multiple strands of evidence to guide some judgment.

As Ed Leamer said of economists, “We seek patterns and we tell stories”.

That, to me, is a big part of why the history of economic thought matters. It is a protection against fads and monomania, the tendency to fall in love with technique, to think there is only one way to ‘do economics’.

For all the strengths of the erstwhile orthodoxies in economics, they can leave gaps. We often only see the gaps after the event, and only then if we know something about what the orthodoxy replaced.

Returning to Chesterton, he described his religious journey thus:

I did try to found a heresy of my own; and when I had put the last touches to it, I discovered that it was orthodoxy.

Perhaps for many of us it was something of the opposite. We went in search of the economic tradition, and as we made our way through the canon, found it led us towards pluralism.

Hugh Stretton Oration

Chair Danielle Wood delivered the 2024 Hugh Stretton Oration at the University of Adelaide.

I would also like to begin by recognising the Kaurna people as the traditional owners of the land on which we meet today. I acknowledge their deep connection to this place and pay my respects to their elders, past and present and to any Aboriginal or Torres Strait Islander people with us this evening.

Thank you everyone for being here. It is very special for me to be back at the University of Adelaide, the place where I experienced the heady years of my undergraduate Economics degree. Lots of long afternoons in the Reading Room of the Barr Smith library, the occasional equally long one at the Uni Bar (RIP), but an incredible spirit of learning, thinking and debate that was so foundational for me.

A huge thank you to the Provost, Professor John Williams AM, Deputy Vice-Chancellor and Vice-President (Academic) Professor Jennie Shaw and Professor Adam Graycar for having me back. I am honoured to have the Governor Her Excellency the Honourable Frances Adamson in attendance, as well as the University Chancellor, the Honourable Catherine Branson AC KC.

There are also a couple of other very special guests in the audience, my parents Rae and Simon Wood, who are hearing me speak for the first time in a professional setting this evening. And I am grateful that after listening to my almost constant talking from the age of two, they are still willing to come back for more.

Hugh Stretton’s legacy

It is also a huge privilege to have the opportunity to honour the professional contribution of Professor Hugh Stretton AC. Professor Stretton’s incredible intellect and impressive CV have been well detailed by the Vice-Chancellor.

Three things stood out to me in reading about his professional life.

The first was his intellectual energy and imagination. I particularly enjoyed the reference provided by one of his former supervisors on his application to lead the School of History:

The first impression is of extremely high intelligence. He uses his gifts quietly, however, and is given as much to listening as to talking... He is quite clearly an exact and energetic scholar, though … I am not at all confident that he will publish either quickly or much. I have no doubt that he would build a School of History soundly and with imagination. 1

Now today, any mention of a relaxed approach to publication might be an automatic disqualifier, but the referee was right about Stretton’s suitability to successfully lead the department.

By the end of his tenure as chair in 1966 the School of History had gained a reputation as one of the best of its kind in Australia. 2 And much of this was down to Stretton’s reputation as a formidable thinker and public intellectual. 3 Ultimately in academia, and in life, the spirit of curiosity counts for much.

The second was Professor Stretton’s gift for turning new ideas into policy practice.

His most famous work, a book on urban planning called Ideas for Australian Cities, was so influential that when applicants were interviewed for positions in the Whitlam government’s Department of Urban Development, the first question was: ‘What do you think of Stretton?’. 4

While becoming the opener for a public service interview is a bar not many of us will reach, that spirit of marrying rigorous evidence with real world policy implications is one that I know that many of us strive for in our work.

And the third and perhaps most notable thing was Stretton’s unwavering belief in fairness and opportunity for all. He believed public thinkers have a responsibility to look for chances to make a difference, to reduce disadvantage and make Australia a place where anyone can prosper.

As he once said: “We should be doing all we can, by old and new means that fit our changing historical conditions, to leave Australia fairer than we found it”. 5

Tonight I hope to give you some ideas about how we take up Stretton’s challenge.

I’m going to take you through what we know about inequality in Australia today.

Using new analysis released this week by the Productivity Commission, I’ll show you the distribution of wealth and income in Australia and how it’s changed over time.

I also want to give you a sneak peek of some research we haven’t yet published on intergenerational mobility. This goes to the important question: how much does who your parents are go on to influence your life outcomes?

To finish, I’m going to give you a ‘fair go toolbox’ – a set of policy allen keys that can fit the inequality problem at hand.

A few disclaimers...

But first let me start with a few disclaimers, and as I’m an economist rather than a lawyer I’m going to put these right upfront rather than buried in size 6 font in a footnote.

1. Economic inequality is a surprisingly slippery construct

‘The rich are different to you and me’, writer F Scott Fitzgerald once claimed. ‘Yes’, said Hemingway, ‘they have more money’.

At one level inequality is that simple: some people have more money than others.

But as I will come to, economic inequality measures can vary a lot depending on what we are measuring – do we care about income, consumption or wealth?

And there can be a range of worthwhile questions to ask:

- How are those doing it toughest faring?

- How much do the most well off – say the top 10% or 1% – have compared to others?

- Or how are resources distributed across the population as a whole?

Each can give us different insights.

And that’s before we even get to the question of how opportunities and outcomes change over a person’s lifetime, or vary by gender, age or for First Nations Australians.

So, to manage this complexity, tonight I’m going with the maximalist – or perhaps the Strettonist – approach. I will take you through a range of indicators and cuts of the data to give you a broad account of the state of play. But I also want to talk about what this actually looks like in people’s lives.

As Stretton said:

“I’m not sure that much valuable reform has sprung from high theory about the dynamics of distribution. More has come from ...competent accounting, summarising and insistent publication of the patterns of inequality… and the effects of those distributions on the quality of people’s experience in life.” 6

2. Be a sceptic

It is somewhat uncomfortable to say this as the leader of the organisation that prides itself on evidence-based, often data-driven, analysis. But in almost all data analysis we deal with imperfect data and are forced to make choices about how to address that.

In inequality research those choices can make a big difference to the story.

Very recently this challenge has jumped off the pages of academic journals and on to the front page of the Washington Post with the so-called ‘inequality wars’.

On one side of the war you have famous inequality researchers, Thomas Piketty and Emmanuel Saez.

They have spent the better part of two decades analysing inequality, including by using American tax data to document a significant and growing share of US incomes flowing to the top 1%.

It’s rare to get rock star economists, but these guys are it.

(As an aside, if you purchased but did not finish Piketty’s hefty Capital in the Twenty-First Century back at the height of Pikettymania in 2014, you are not alone: on some measures it is the second most unfinished book on Kindle, after Hillary Clinton’s autobiography...). 7

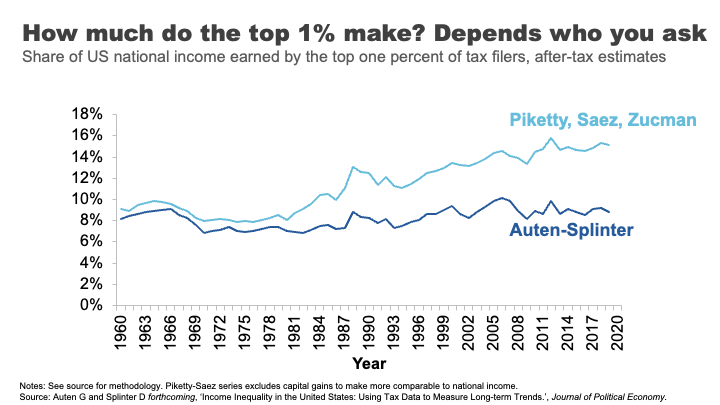

On the other side of the war sit Gerald Auten and David Splinter – their names might not ring any bells.

These two relatively unknown tax code nerds come from the US Treasury Department and Congress’s Joint Committee on Taxation.

Using the same tax data as Piketty and Saez, they come up with the opposite conclusion: after tax, the share of US income going to the top 1% has barely moved since the 1960s.

Cue much triumph from some commentators and members of the press.

But beyond the simplistic discussions of Piketty and Saez being proved ‘wrong’ was the more nuanced truth – both sets of researchers had made a series of judgments around issues like how to estimate ‘missing money’ not included in tax returns, and how to attribute spending on health, education or defence across the population.

The appropriateness of each of these judgements is now the subject of further debate, but what is clear is that what might seem like technical judgments can sometimes have a big effect on conclusions.

In all the analysis I present tonight we’ve tried to be as robust and open as we can about any judgments made. But I encourage you to keep your sceptic’s hat on.

3. There will be graphs

I’m an economist, so there will be a lot of graphs this evening. But I’ll do my best to ‘use my words’ and hopefully we can avoid data overload.

Income inequality in Australia

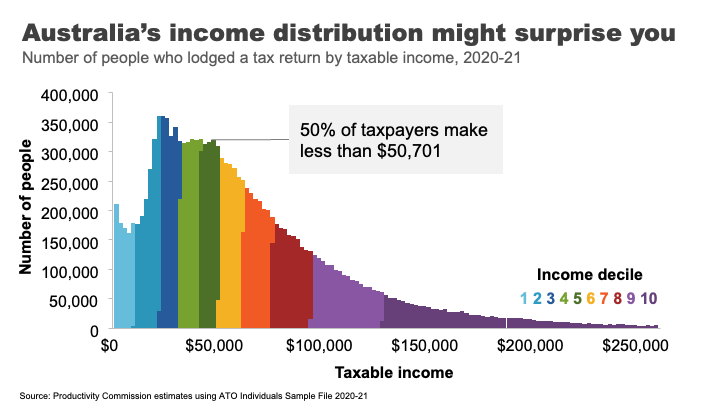

Ok, let’s start with talking about the broad distribution of income in Australia.

I know it’s not always the done thing to talk about what you earn in public, so I’ll ask you to do this exercise in your heads. I want you to think about whether you consider yourself to be a low-income earner, a middle-income earner, or a high-income earner.

Let’s see how you went.

Here’s the distribution of Australian taxable income. That is, the income before tax but after you’ve made any allowable deductions.

If your taxable income is over about $51,000, you earn more than 50% of Australians who lodge a tax return. If you earn more than $95,000, that puts you in the top 20%. If you earn $336,000 or above – you are the 1%.

A surprising number of people get this wrong. In fact, the vast majority of us consider ourselves to be ‘middle income earners’. 8 This is probably because the people we tend to live near and associate with are more likely to be in a similar tax bracket to us.

This is presumably why every year or so high earners from the media and political class kick off passionate debates about whether $200,000 is really a high income 9 – while, I suspect, the 97% of Australians earning less than that amount just roll their eyes.

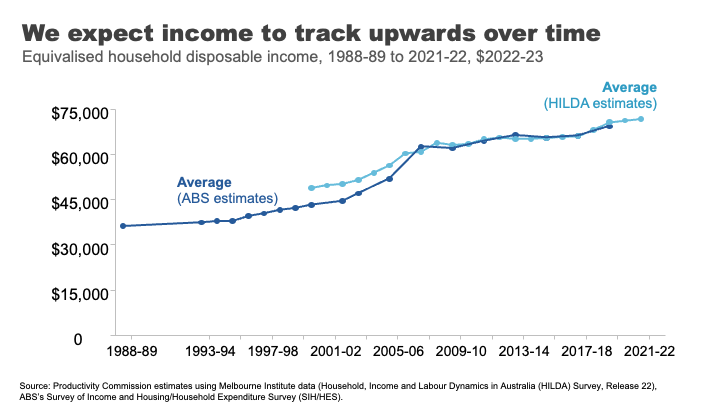

If we move to looking at disposable income – that is, income after tax and transfers – for an average Australian household, we can see that incomes have risen over time.

This is generally what we have come to expect, that outside of short dips during major economic shocks, income growth will continue its long march upwards over time.

And how has this growth been distributed?

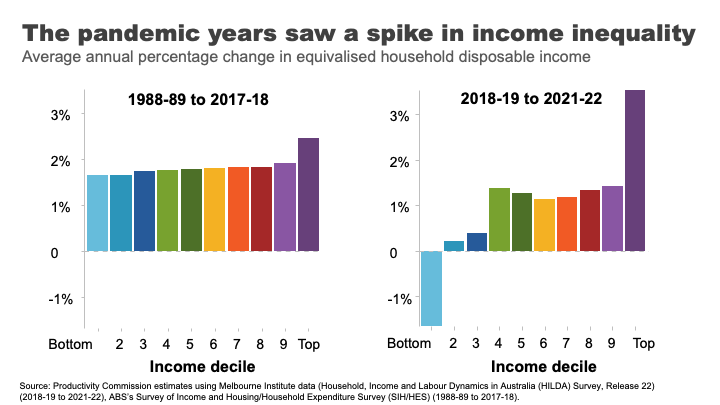

The answer is relatively equally across the population in recent decades.

Indeed, in the 30 years between 1989 and 2019, income grew pretty consistently across the income distribution. Those in the top 10% experienced slightly higher growth than other groups, but nothing like the strong growth in income inequality seen in the US that dominates much of our inequality discourse.

In contrast, the COVID period and its aftermath have seen greater dispersion, with incomes at the top growing rapidly and those at the bottom going backwards.

The reasons are complicated, but reflect the roller coaster ride of lockdowns and recession, increases and withdrawals of government supports, and the healthy bounce back and high inflation that followed.

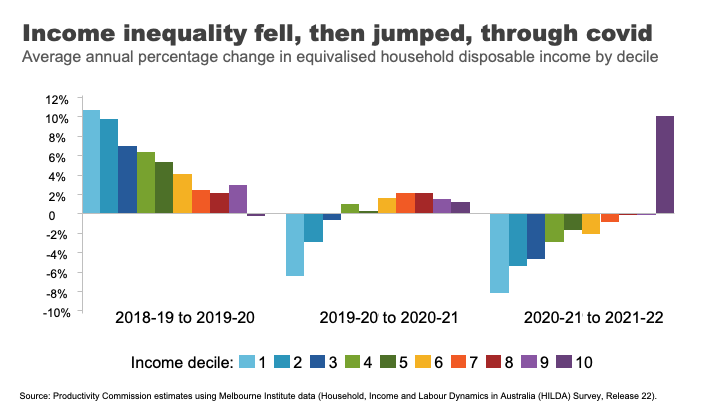

As you can see, the three years of the COVID period actually reflect three quite different inequality dynamics.

At the start of the COVID-19 pandemic, government-imposed lockdowns caused drastic declines in economic activity and widespread job losses. 10 In response, the Australian Government provided substantial support, which cushioned the economic harm across the community.

The effective doubling of the JobSeeker payment, and the flat $1500 per fortnight JobKeeper payment for workers in eligible businesses 11 produced high income growth for those at the bottom and middle of the income distribution.

As the economy re-opened and supports were withdrawn, those gains were reversed.

And despite the strong economy and labour market, high inflation meant real wages went backwards for most workers over the past two years.

In contrast, high income households benefited from rip-roaring growth in business income as well as decent investment income, as the economy recovered.

Overall, and barring any further major shocks, we can probably expect the next few years will bring a return to more ‘normal’ income growth and certainly to the more consistent patterns across the distribution observed in the pre-COVID era.

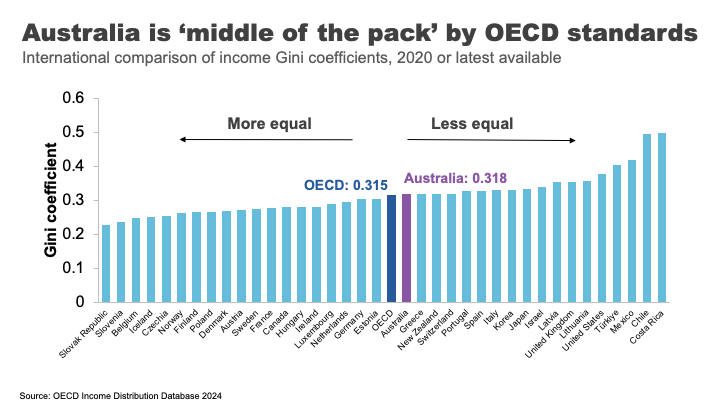

It is also interesting to reflect on how we compare to other nations.

Egalitarianism is tied up with Australian identity. We are the land of the ‘fair go’, a place where your taxi driver, your boss, and even the Prime Minister can all be safely referred to as your ‘mate’.

But does the reality match the mythology?

Not entirely.

Australia’s income inequality is ‘middle of the pack’ by rich-country standards. Comparing based on the Gini coefficient – a measure of overall inequality – Australia is close to the OECD average.
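For readers who want to see what sits behind that single number, here is a minimal sketch of one common way to compute a Gini coefficient from a list of incomes. The function name `gini` and the sample incomes are illustrative assumptions only; this is not the OECD's published method, which applies its own income definitions and household equivalisation.

```python
def gini(incomes):
    """Gini coefficient of non-negative incomes.

    0 means everyone has the same income; the value approaches 1
    as a single household holds nearly everything.
    """
    values = sorted(incomes)
    n = len(values)
    total = sum(values)
    if n == 0 or total <= 0:
        raise ValueError("need at least one positive income")
    # Rank-weighted formula: G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n,
    # where x_i are incomes sorted ascending and i runs from 1 to n.
    weighted = sum(rank * x for rank, x in enumerate(values, start=1))
    return 2 * weighted / (n * total) - (n + 1) / n


# Hypothetical incomes, purely for illustration.
equal_society = [50_000, 50_000, 50_000, 50_000]
unequal_society = [10_000, 20_000, 30_000, 40_000]
print(gini(equal_society))    # 0.0
print(gini(unequal_society))  # 0.25
```

The same function can be applied to income before and after taxes and transfers to see how much redistribution moves the measure.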

Overall, we are not as unequal as our friends in the US and the UK, but nor are we as egalitarian as the Nordic countries.

One important reason for these large cross-country differences is differences in tax and transfer policies.

For example, the US starts relatively unequal, but by no means the most. But because they redistribute income less, they end up the most unequal of Western nations. 12 In contrast, Finland has close to US-levels of inequality before taxes and transfers. After, it is one of the most equal nations in the OECD. 13

These are important differences that highlight a point I want to come back to: policy choices matter to inequality.

But now we have a snapshot of how Australians across the distribution have fared over time and relative to elsewhere, I want to turn to another important part of the inequality story: outcomes and opportunities for the most disadvantaged.

Poverty in a rich country

It’s almost 40 years since Bob Hawke declared that no child would live in poverty by 1990. 14

But, according to ACOSS, one in eight people lived below the poverty line in 2019-20, including one in six children. 15

This measure of poverty looks at relative income poverty – it’s set at 50% of the typical Australian household disposable income, less housing costs.
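As a rough illustration of how a relative poverty line of this kind works, the sketch below sets the line at half the median of a list of after-housing-cost incomes and counts the share of households below it. Reading 'typical' as the median is an assumption, as are the function name `poverty_rate` and the sample figures; the published ACOSS estimates involve further steps (such as adjusting for household size) that are not shown here.

```python
from statistics import median


def poverty_rate(incomes_after_housing, threshold_share=0.5):
    """Return (poverty_line, share_below_line) for a list of incomes.

    The line is threshold_share times the median income, so it moves
    with the middle of the distribution rather than being a fixed amount.
    """
    if not incomes_after_housing:
        raise ValueError("need at least one income")
    line = threshold_share * median(incomes_after_housing)
    below = sum(1 for income in incomes_after_housing if income < line)
    return line, below / len(incomes_after_housing)


# Hypothetical weekly disposable incomes after housing costs.
sample = [200, 400, 800, 1000, 1200]
line, rate = poverty_rate(sample)
print(line, rate)  # 400.0 0.2
```

Because the line is tied to the median, raising every income by the same proportion leaves the measured poverty rate unchanged, which is one reason some prefer the absolute measures discussed next.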

Some argue it is better to look at absolute measures (for example, the amount of money required to be sure a household can achieve a basic standard of living) or indicators of material deprivation.

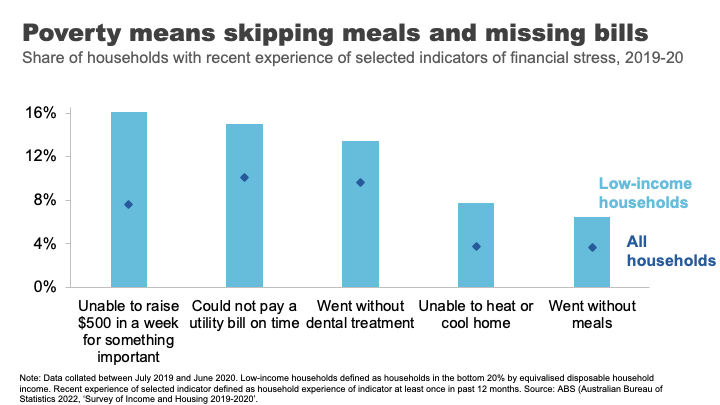

For example, amongst low-income households in 2019-20, 16% were unable to raise $500 for something important, 8% were unable to heat their homes, and 7% went without meals. 16

And it’s that sense of precariousness that pushes the impacts of poverty from the material to the mental. And those impacts can have a long tail.

As journalist Rick Morton writes about his own childhood growing up in a poor household: 17

I saw Mum’s daily, sometimes hourly, battles to stay solvent. I saw how hard she worked and what it did to her body and her mind. The stress of even thinking of it now is difficult to explain. It is built not only into my own mind but also in my flesh. The things I will do to avoid the feeling today. The things I try to do for Mum to make it so she never has to feel it again.

The biggest risk factors for poverty are: being on JobSeeker or Parenting Payment. 18

Policy and poverty remain inextricably linked.

Wealth inequality in Australia

Of course economic differences are not just found in incomes. Wealth or how much money you have ‘behind you’ is an important determinant of outcomes too. Wealth is a buffer – it can be converted into future consumption opportunities and provides a sense of financial security.

That is why we understand the pensioner who owns their home and has $250,000 in the bank is in a materially different economic position to the single part-time worker who records a similar $30,000 income but has few assets.

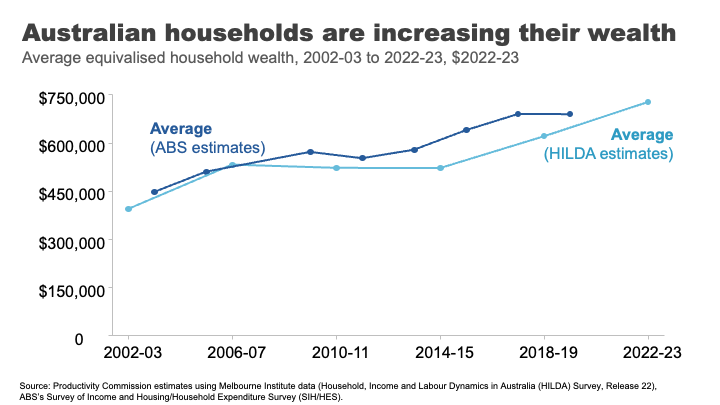

Wealth has grown significantly in Australia in recent decades.

I remember in primary school the shorthand for someone really, really, rich was a ‘millionaire.’ The average Australian household is now more than halfway to that benchmark. Indeed, a person that owns a typical house outright in Clarence Gardens is a ‘millionaire’. 19

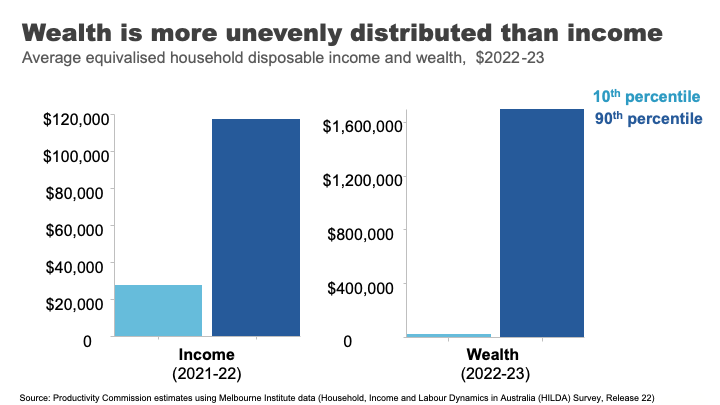

Wealth overall is much less evenly distributed than income.

If we take a household at the 90th percentile for wealth, they have almost 70 times as much wealth as a household at the 10th percentile. For income the figure is only four times as much.

Wealth also has much greater extremes.

In his 2013 book on inequality, Battlers and Billionaires, Parliamentarian and economist Andrew Leigh provided a memorable analogy, which I have updated today:

Imagine a ladder on which each rung represents a million dollars of wealth. If we were to put all Australian households on this ladder, 50% of us are about halfway to the first rung, the top 10% are about 1.5 rungs up, the top 1% are reaching for the fifth rung – just high enough to clean the gutters. 20 Gina Rinehart is more than 11 km off the ground. 21

But even with this very long ladder, Australia’s wealth inequality is lower than many other OECD countries. 22

But an important question is how has the distribution changed in recent decades?

The answer is: it’s complicated.

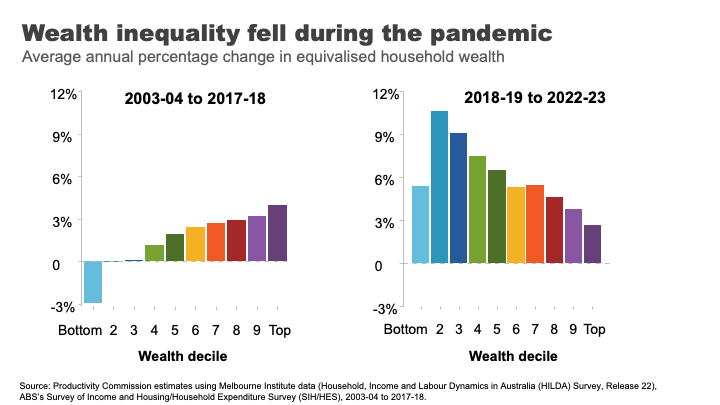

Pre-pandemic, wealth grew faster for the top half of the distribution.

But the pandemic, once again, produced some surprising results.

This pattern flipped and wealth grew more quickly overall and significantly faster for lower and lower middle wealth groups during the COVID period.

The two main reasons for this are:

- The strong growth in housing prices, particularly in the regions and the smaller capital cities. This had the biggest impact for homeowners in the lower middle and middle parts of the wealth distribution.

- Higher income from increased government support during the pandemic and fewer opportunities to spend, helped boost bank balances and debt repayment among low-wealth households. 23

And while this is good news for many households, at least in the short term, the longer-term run-up in house prices has produced a different set of inequality concerns.

A decaying dream? House prices and inequality across generations

Here I want to stop and reflect on the different ways in which the very strong growth in house prices has impacted economic outcomes for different groups in Australia.

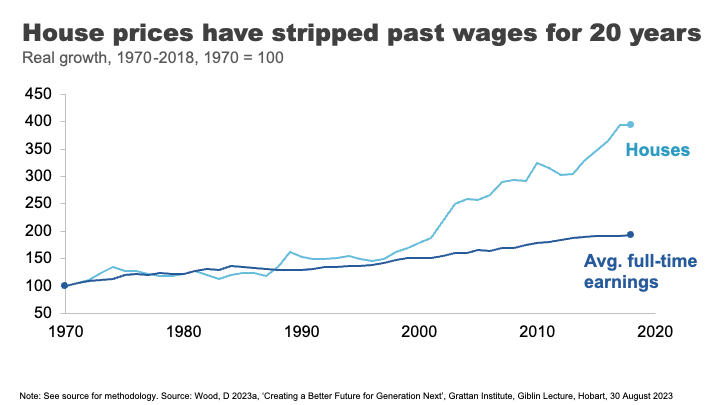

Until the 1990s, house prices broadly tracked growth in incomes. But between 1992 and 2018 they grew at almost three times the pace on average. 24

The effect has been an increase in the upfront barrier to home ownership and increasingly also the ongoing costs for those that are able to clear that hurdle.

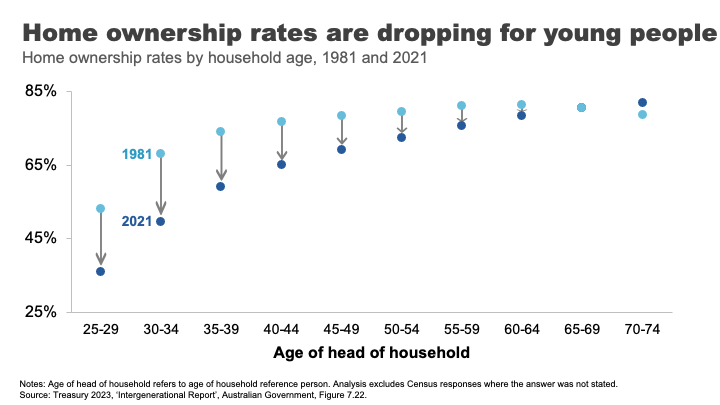

The result, unsurprisingly, has been falling home ownership.

In the early 1980s, when my parents were buying their first home, around 70% of those in their early 30s owned a home. Today that figure is just 50%. And the drops have been particularly acute amongst low-income young people. 25

The declining opportunities for homeownership are a particular source of dissatisfaction and unrest amongst many non-homeowning younger Australians. Amongst the so-called Generation Z non-homeowners, 93% want to own their own home. But only 63% think it is likely that they ever will. 26

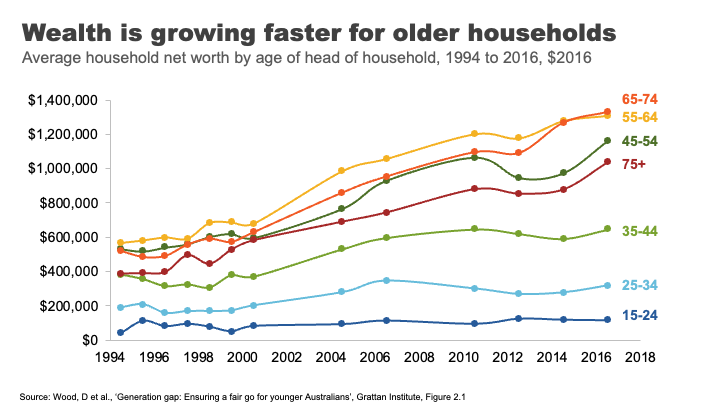

The rise in house prices has also contributed to rising generational disparities in wealth accumulation.

Older households have always had more assets on average than younger ones. But the run-up in house prices has created windfall gains for existing homeowners. This has been a major contributor to the rapid growth in wealth among older households.

A household headed by someone aged 65-74 had on average $1.3 million in assets in 2016, up from $900,000 for the same age group in 2004. Rising asset prices over the past seven years mean this figure is almost certainly substantially higher now.

In contrast, the wealth of households under 35 has barely moved in 15 years. And poorer young Australians have less today than poorer young Australians did 15 years ago. 27

Overall the developments in the housing market over recent decades have left many, particularly many older Australians, very well off. But the cost has been considerable housing stress amongst the vulnerable, and a generation of younger Australians who will reach middle age with substantially lower rates of home ownership than their parents.

Land of the fair go? Social mobility in Australia

Now I want to move from the photo to the movie: from talking about disparity in economic outcomes at a point in time to talking about how these outcomes can evolve over someone’s life.

A question that has rightly been of interest to those concerned about inequality is how inequality in outcomes influences equality of opportunity. Or to be more specific – how much are economic opportunities determined by who our parents are?

Generational mobility has historically been a hard thing to study. To understand its dynamics we need linked data on parental economic outcomes and those of their children over a long duration.

In the absence of this type of data, at least until recently, people got creative.

In one of my favourite studies, Parliamentarian Andrew Leigh, alongside co-authors Gregory Clark and Mike Pottenger, identified rare surnames in the 2014 electoral roll among doctors and university graduates from 1870. They found, nearly 150 years later, that people with those rare surnames are more likely to be in the so-called ‘elite’ professions than people with surnames such as Smith. 28

Indeed, they found that so-called ‘status persistence’ for surnames was as high in Australia as for England or the United States. 29

In somewhat brighter news, more recent studies using linked administrative data point to a more optimistic picture on social mobility in Australia.

Looking at economic outcomes for a million individuals born between 1978 and 1982, economists Nathan Deutscher and Bhashkar Mazumder find that Australia is one of the more economically mobile advanced economies. 30

Indeed, they find Australia’s ‘intergenerational elasticity of income’ (a measure of how much your family’s income affects your own) is similar to Canada’s and close to those of the Nordic countries, making Australia considerably more mobile than places like the United States. 31
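To make the elasticity concrete: it is commonly estimated as the slope from regressing the log of children's adult income on the log of their parents' income, so a lower value means a looser link between generations. The sketch below is a minimal illustration under that assumption, with made-up numbers and a hypothetical function name; it is not the specification used by Deutscher and Mazumder, whose estimates rest on linked administrative data and additional adjustments.

```python
import math


def intergenerational_elasticity(parent_incomes, child_incomes):
    """Slope of a simple regression of log child income on log parent income."""
    if len(parent_incomes) != len(child_incomes) or len(parent_incomes) < 2:
        raise ValueError("need matched parent-child pairs (at least two)")
    log_parent = [math.log(p) for p in parent_incomes]
    log_child = [math.log(c) for c in child_incomes]
    mean_p = sum(log_parent) / len(log_parent)
    mean_c = sum(log_child) / len(log_child)
    # Ordinary least squares slope = covariance / variance of the regressor.
    covariance = sum((p - mean_p) * (c - mean_c)
                     for p, c in zip(log_parent, log_child))
    variance = sum((p - mean_p) ** 2 for p in log_parent)
    if variance == 0:
        raise ValueError("parent incomes must not all be identical")
    return covariance / variance


# Hypothetical families: children's incomes are constructed so that a 10%
# higher parental income goes with roughly 3% higher child income.
parents = [20_000.0, 40_000.0, 80_000.0, 160_000.0]
children = [100 * p ** 0.3 for p in parents]
print(round(intergenerational_elasticity(parents, children), 3))  # 0.3
```

An elasticity of 0 would mean parental income tells you nothing about a child's income; a value of 1 would mean income gaps between families are passed on in full.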

In forthcoming work, the Productivity Commission uses family-linked tax data and confirms that estimates of intergenerational mobility remain comparatively high.

But in contrast, things may be stickier for those doing it toughest.

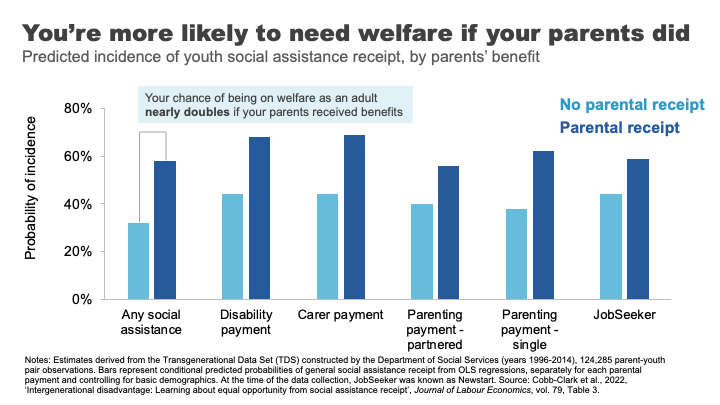

In a 2017 study, Professor Deborah Cobb-Clark and her co-authors showed that young people are 1.8 times more likely to need social assistance if their parents have a history of receiving social assistance themselves. 32

Consistent with this, the Productivity Commission’s forthcoming work shows that people in their late 20s whose parents received social transfer payments were about one and a half times more likely to receive social transfer payments themselves.

The Cobb-Clark work showed that these transmission effects were particularly pronounced for disability payments, payments for those with caring responsibilities, and parenting payments for single parents. Interestingly, disadvantage stemming from parents’ poor labour market outcomes was much less persistent. 33

Cobb-Clark and her co-authors posit that parental disadvantage may be more harmful to children’s later life outcomes if it is more strongly driven by circumstances rather than personal choice.

This aligns with the growing appreciation by economists of the impact of lack of hope or despair in shaping life choices and outcomes. 34

This was the sentiment expressed by a Tasmanian woman on welfare supports: 35

It’s not so much what we are missing out on, it’s the next generation and it is a hard cycle to break because they look at it and think, well, what’s the point? We’re always going to be poor, things are hard, nothing’s going to get better. Why should we bother?

Schools under stress: a red flag for future mobility?

One of the foundational supports for economic mobility is a strong education system.

Indeed, educational attainment has been estimated to explain up to 30% of the transmission of economic advantage between parents and children. 36

Australia has historically had a strong system of school education that has supported opportunities across the population.

But there are some red flags for future prosperity and mobility that we should heed.

Indeed, despite growing funding in recent years, Australia’s school system has not been delivering the results we want for our young people.

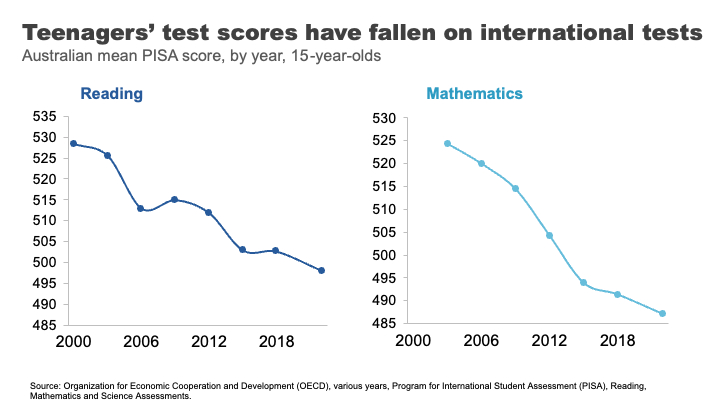

Data from the OECD suggest that the performance of Australian school students in Reading and Maths is going backwards, with significant falls in our levels of achievement since 2000.

Estimates based on this data suggest the average Australian Year 10 student in 2018 was eight months behind in reading compared to where Year 10 students were at the turn of the century, and results have largely flatlined since. 37 We’ve seen even sharper declines in mathematics scores, where the decline for Year 10 students by 2018 was almost a year of learning.

But how much does parental background make a difference to how students fare?

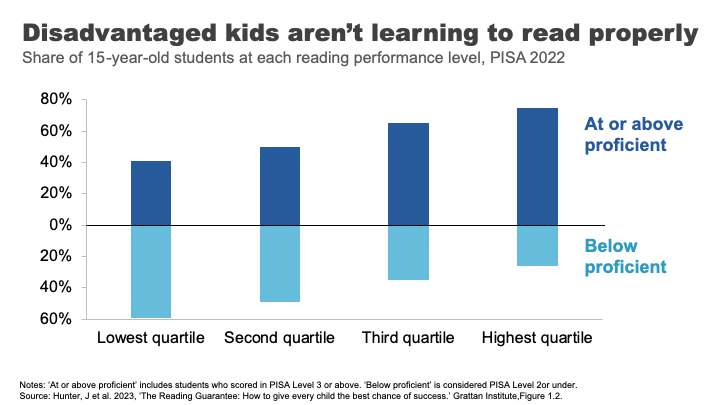

The answer is a lot. More than half of the most economically disadvantaged 15-year-old students in Australia are not proficient readers.

Analysis from the Grattan Institute shows that the disparity in outcomes was worse in Australia than in Canada or the UK and on par with the US. 38

These gaps in performance widen through the schooling process.

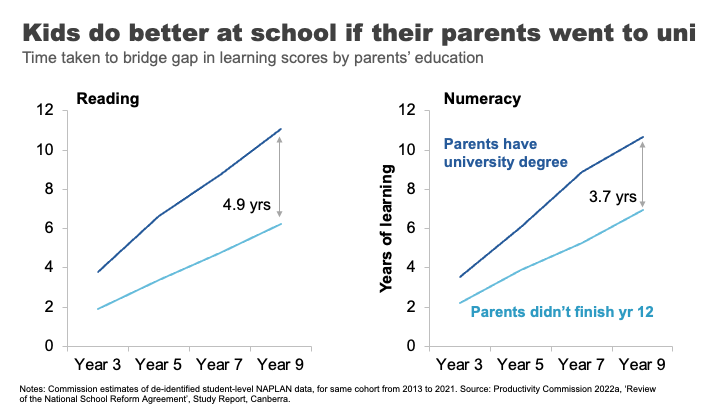

The Productivity Commission looked at this using NAPLAN data. 39 We compared the average reading outcomes of students whose parents did not finish high school to those whose parents have a bachelor’s degree or higher. We found the learning gap – equivalent to almost two years of reading achievement in Year 3 – progressively widens to an almost five-year learning gap by the time students reach Year 9.

For mathematics, the gap widens from 1.3 to almost 4 years.

If we take education as an indicator of both a country’s future economic prosperity and its social mobility – this data must concern us.

It has been pleasing to see senior leaders, including here in South Australia, engage with this issue and its implications. But turning the ship around will require significant shifts in the way we deliver education in Australia.

A nation divided: why mixing matters for mobility

Another less obvious mobility-enhancer is where we grow up, and more specifically, who we grow up with.

US economist Raj Chetty and co-authors made a splash in 2014, when their study using administrative records on the incomes of more than 40 million children and their parents found very large variations in social mobility across the US. For example, they estimated a child from the poorest 20% of families had nearly a 3 times better chance of making it into the top 20% of income earners as an adult if they lived in Silicon Valley rather than Charlotte, North Carolina. 40

In a later paper, Chetty and another co-author reinforced the importance of these neighbourhood effects by studying outcomes for families who moved to different parts of the United States.

They found that outcomes for children whose families move to a better neighbourhood improve the more time they spend there.

Indeed, every additional year in a ‘good neighbourhood’ sees that child’s outcomes converge closer to the average for that neighbourhood by about 4%. 41

And if you are thinking that this type of locational lottery could only exist in a place as unequal as the United States – think again.

Economist Nathan Deutscher has replicated this work for Australia. 42

And while the disparities between regions are less pronounced here, we see the same convergence in outcomes the longer a child is exposed to a ‘good neighbourhood’.

Deutscher finds that place matters most during the teenage years and suggests it might be ‘peer effects’ that explain locational differences in outcomes.

In other words, who you hang around with in those formative years makes a difference. Which may well be a validating result for any parent that has ever uttered the immortal phrase: “If Tanya jumped off a cliff, would you do it too?”.

This is consistent with more recent work that suggests it is economic connectedness – the capacity of low socioeconomic people to make friends with those from higher socioeconomic groups – that is the principal driver of social mobility. 43

And this is the very thing that gets lost as neighbourhoods and schools become more stratified and we participate less in social mixing opportunities. This means that observed declines in the types of activities that help build the social glue – from volunteering, to local sport, to attending church – over time might further erode social mobility.

What’s a policy maker to do?

Where does all this leave policy makers?

How much policy makers should seek to address inequality is not a straightforward question. It has been dissected by philosophers since Plato. And economists have been at intellectual fisticuffs over it for much of the past century.

Today, even the strongest advocates for greater equality will acknowledge that some inequality is inevitable and that it is important to maintain incentives for innovation and effort.

Many of the richest people in the world – Gates, Dyson, Musk – are innovators whose work has reshaped our lives. It is at least partly the ‘size of the prize’ available to successful innovation that drives the efforts and risks of would-be innovators and entrepreneurs. 44

On a more relatable level, let’s think of the University we are at tonight. Would we expect students to flow through these gates to spend years of their lives learning about engineering or medicine or economics, and to work long hours while establishing themselves in their career, without some return for these efforts? In other words, incentives are important for growing the pie, even if they result in a somewhat unequal distribution of it.

On the other hand, even many of capitalism’s biggest cheerleaders raise concerns about the social and economic implications of stark economic dispersion.

Recently the IMF has warned that high inequality and especially poor social mobility can impact on long-term economic growth. 45 Others have shown that physical and mental health problems are worse in more unequal societies, predominantly due to the physiological stress of operating within a steep economic hierarchy. 46 And still others have linked rising inequality, or declining social mobility, 47 with the rise of populism as the ‘left behind’ lodge their protest votes against the so-called elite.

All of this is to say that targeting inequality is complex. And while the ‘line’ across which inequality flips to doing more harm than good is far from clear, what is clear is that policy makers have a broad set of tools that can help push in their desired direction.

A policy makers’ toolbox

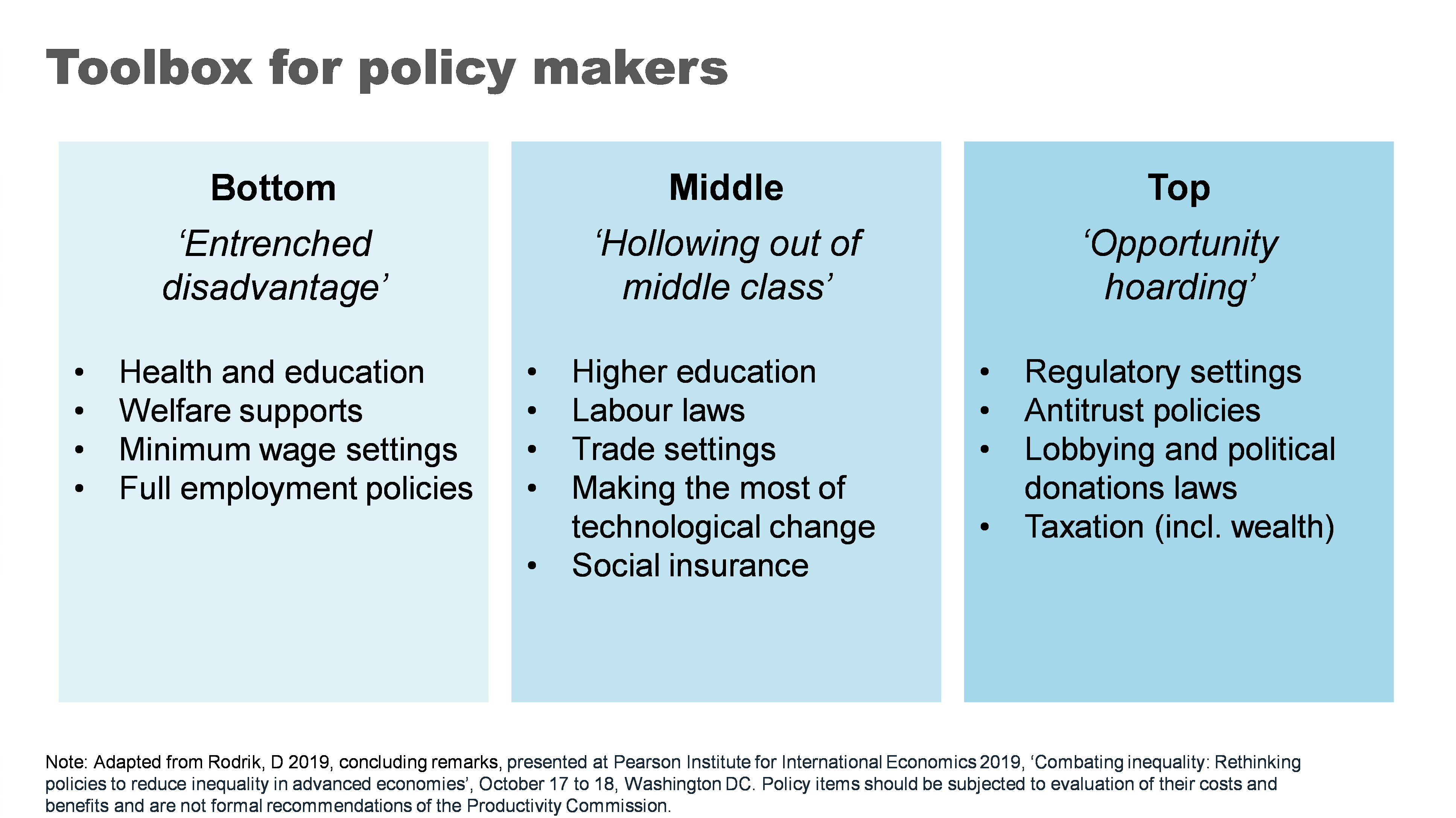

A few years ago, a group of high-profile economists organised an international conference on combatting inequality with a mission of engaging with the full suite of policy responses. 48

Their conclusion, although not revolutionary, provides helpful clarity: that the right policy response depends on why you care about inequality.

In particular, they draw a distinction between policies concerned with:

- outcomes for the most disadvantaged – particularly for addressing entrenched poverty

- boosting opportunities for a ‘hollowed out’ middle class – a much greater concern in the United States than here, where, as we have seen, income growth has been broader based

- opportunity hoarding – or the way wealthy people might leverage their economic and political power to entrench their position.

The right policy tool will also depend crucially on whether policy makers are more concerned with equality of opportunity or outcomes.

Below is an adapted version of the taxonomy they created. It shows the breadth – and importantly the targeting – of different levers that a would-be inequality buster might pull.

Now I do not advocate for all of the policies proposed. Indeed, whether any of these policies would be a good idea would require careful analysis of the costs and benefits in the particular context you might use them. There are probably good ideas that have been left out too.

But I did want to talk very briefly about four of the ‘biggies’ that I think really matter in an Australian context.

Growing the pie can mean bigger slices for all

Now in a presentation largely focused on distribution of the pie, I want to make the case for making the pie bigger.

A cross-country and cross-time evaluation suggests that growth is effective in reducing poverty. 49 Indeed, incomes for the bottom 10% are highly correlated with overall economic growth – a rising tide lifts some very important boats. We could put this beyond doubt for Australia by addressing some of the weaknesses in the current social safety net – a point I’ll return to.

The impact of higher economic growth on overall inequality is less clear. 50 But what is clear is that faster economic growth gives governments more room to support more generous welfare policies as well as other social spending on areas like education and health that particularly benefit those at the bottom and middle of the income distribution.

More generally healthy income growth can also support the political ‘buy in’ for these types of policies. 51

As for what governments can do to grow the pie – that would be a whole other lecture. But if the pie is of interest: ‘here’s one I prepared earlier’. My colleagues have published a comprehensive 1000-page guide for governments looking for ways to boost productivity and growth. 52

Fixing the housing mess

A functioning housing system is critical for improving our social and economic outcomes. Building more houses closer to jobs and amenities is needed to help younger and poorer Australians access the same opportunities as previous generations.

Australia’s population has grown strongly over the past two decades and will likely continue to do so. We can choose to push people out to the ever-expanding fringes of our cities or accommodate them through boosting supply in the inner and middle ring suburbs where most would prefer to live.

Allowing greater density in these areas not only expands supply but also boosts variety in housing choices, supporting more of the cross-socioeconomic mixing critical to social mobility.

After at least two decades of letting the ‘housing market frog’ slowly boil, there have been some positive steps from both Commonwealth and State governments to support the planning changes needed to boost supply. In particular, the Commonwealth government has offered incentives for states to target the construction of 1.2 million new homes over the next five years. 53

Similarly, moves to boost social housing are also a positive step, particularly where they’re targeted to those with the highest need.

Unfortunately, this new-found policy energy has come at the same time as the building industry faces challenges in ramping up.

But over time, if ambitious growth targets can be met, this could be a powerful shift in reducing inequality both within and between generations.

An education revolution?

School education is fundamental to supercharging opportunities for the next generation.

And while some of the problems our system faces are thorny, some of the solutions are surprisingly straightforward.

Our focus should start with getting the basics right – our schools should be supported and held accountable for delivering basic levels of literacy and numeracy. 54 My former colleagues at Grattan have called for a ‘Reading Guarantee’ – whereby governments would commit to ensuring at least 90% of Australian students learn to read proficiently at school. 55

Supporting schools and teachers to deliver on these basics would require:

- making sure all teachers adopt evidence-based teaching practices such as phonics decoding for reading 56

- providing all schools and teachers access to a bank of well-sequenced high-quality curriculum materials 57

- reducing low value tasks for teachers to free them up to spend time on what really matters, 58 and

- providing better career paths to help schools attract and retain top teachers, and allowing top teachers to support and develop others in the profession. 59

Boosting the social safety net

The Federal Government’s Economic Inclusion Advisory Committee just released its second report designed to inform the budget process.

Its lead recommendation remained unchanged from last year: to increase the rate of the JobSeeker and related income support payments.

The Committee finds that Australia’s unemployment benefits have been slipping further and further behind community living standards for two decades. 60

And while the recent 10% increase to Commonwealth Rent Assistance will provide much needed relief – as did the extra 15% in the last Budget – the Committee’s report demonstrates that the value of the payments has fallen significantly relative to average rents over the past 25 years. 61

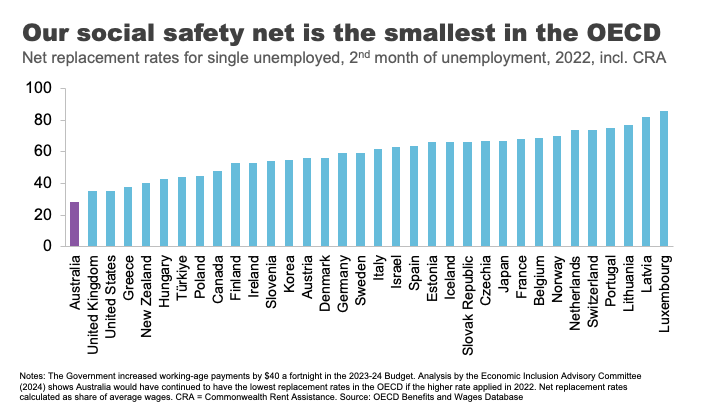

Today, Australia’s payments to the short-term unemployed, including housing benefits, are the least generous in the OECD. 62

No single measure would do more to alleviate poverty than a material change in these payments.

What’s stopping us?

Partly it’s likely to be well-meaning concerns about the impact on incentives to work.

But consistent with previous work, the Economic Inclusion Advisory Committee finds that the negative effect on incentives is likely to be small, because current levels of the payment are so far below incomes from working. 63

Indeed, for those facing economic exclusion, higher income support payments may improve the capacity to search for and accept employment. 64

Second is the cost. Moving as far as the Economic Inclusion Advisory Committee recommends on JobSeeker and related payments could cost up to $4.6 billion per year, 65 which is not straightforward for governments balancing a range of competing priorities. But as targeted interventions to address poverty go, there is very little waste.

Finally, there is the question of community support. Boosting JobSeeker payments rarely garners majority support across the population. 66 As I have previously argued, this is less a case of Australians being mean-spirited and more about the grip of some persistent and unhelpful myths about welfare recipients. Your regular community service reminder tonight: the median JobSeeker recipient is a 45-year-old woman. 67

Finding our inner Stretton

And with that I want to wrap up where I began, with Stretton’s legacy.

Inequality is one of those topics where it is easy to simply revert to tired tropes, particularly off-the-shelf ones from elsewhere.

Stretton encouraged us to look with curiosity and rigour, but also with an open heart. In doing so, everyone may take away something different from the numbers and analysis I have shared tonight.

To me, there are bright spots in the story. Australia has grown its income and wealth over several decades, and it has shared those gains broadly. Social mobility is relatively high.

On the other hand, many relying on payments are in poverty and the long shadow of that experience can be hard for children to escape. Our schools and suburbs are becoming less of a springboard for mobility. And we have put the Australian dream out of reach for a generation of young people.

Policy matters – there are many levers that governments can pull to make a difference to these outcomes. But it is up to Australians to decide which ones we want them to use.

Footnotes

- Munro, D 2016, ‘The House that Hugh Built, the Adelaide history department during the Stretton era, 1954-1996’, History of Education, vol. 46, no. 5, p.634. Return to text

- Ibid, p.631. Return to text

- Spoehr, J 2015, ‘Hugh Stretton: a great Australian public intellectual’, The Adelaide Review, 7 September. Return to text

- Davison, G 2018, ‘Watching a brilliant thinker stretching his mind’, Inside Story, 11 October. Return to text

- Spoehr, J 2015. Return to text

- Gibilisco, P and Stretton, H 2003, ‘A pragmatic social democrat: an interview with Hugh Stretton’, The Journal of Australian Political Economy, vol. 51, p. 13. Return to text

- The Guardian 2014, ‘Can the Hawking Index tell us when people give up on books?’, 8 July. Return to text

- Hoy, C and Mager, F 2021, ‘Why are relatively poor people not more supportive of redistribution? Evidence from a randomized survey experiment across ten countries’, American Economic Journal: Economic Policy, vol. 13, no. 4, pp. 299–328. Return to text

- Hobman, J 2022, ‘EXCLUSIVE: 'It barely cuts it': Aussie finance guru exposes why $200,000 a year is NOT a big salary anymore - despite most of the country earning MUCH less - but not everyone agrees’, The Daily Mail, 14 July; Martin, P 2021, ‘Other Australians don’t earn what you think. $59,538, is typical.’ The Conversation, 8 June. Return to text

- Coates, B and Ballantyne, A 2020, ‘No one left behind: Why Australia should lock in full employment’, Grattan Institute. The unemployment rate surged from 5.2% in March 2020 to a peak of 7.5% in July 2020. ABS (Australian Bureau of Statistics) 2024, ‘Labour Force, Australia, Detailed, February 2024’. Return to text

- The Australian Government provided JobKeeper payments of $1,500 per fortnight to eligible businesses, which had to be paid to their employees, to minimise job losses and maintain employment and job attachment. AIHW (Australian Institute of Health and Welfare) 2021, ‘Australia’s welfare 2021: data insights’, pp. 84–86. The flat payment of $1,500 per fortnight meant some people – particularly part time workers – received more than their salary, while for others it led to a reduction in their salary. Treasury 2023, ‘The Australian Government Independent Evaluation of the JobKeeper Payment Final Report’, 28 September. Return to text

- Hasell, J 2023, ‘Income inequality before and after taxes: How much do countries redistribute income?’, Our World in Data. Return to text

- Ibid. Return to text

- As Prime Minister, Bob Hawke made the declaration in 1987, with the intention to reach the goal by 1990. Hawke, B 1987, speech delivered at Sydney, NSW, June 23rd, Museum of Australian Democracy: Election Speeches. Return to text

- Davidson, P, Bradbury, B and Wong, M 2023, ‘Poverty in Australia 2023: Who is affected’, Poverty and Inequality Partnership Report no. 20, Australian Council of Social Service and UNSW Sydney. Return to text

- ABS (Australian Bureau of Statistics) 2022, ‘Survey of Income and Housing 2019-20’, Australian Government. Return to text

- Morton, R 2020, On Money, Hachette Australia, p.18. Return to text

- Davidson, P, Bradbury, B and Wong, M 2023. Return to text

- ‘Clarence Gardens Adelaide - Greater Region, SA 5039’, https://www.realestate.com.au/sa/clarence-gardens-5039/, accessed 12 May 2024. Return to text

- Leigh, A 2013, Battlers and Billionaires: The Story of Inequality in Australia, Schwartz Publishing, Melbourne, updated according to Productivity Commission estimates using Melbourne Institute data (Household, Income and Labour Dynamics in Australia (HILDA) Survey, Release 22). Return to text

- Estimate based on data from AFR (Australian Financial Review), ‘Rich List 2023’, https://www.afr.com/rich-list, accessed 12 May 2024. Return to text

- Shorrocks, Lluberas, Davies and Waldenström 2023, ‘Global Wealth Report 2023’, Credit Suisse and UBS Global Wealth Databook. Return to text

- See Productivity Commission 2024, ‘A snapshot of inequality in Australia’, Research paper, Canberra, pp. 25-30 for a discussion. Return to text

- Wood, D 2023a, ‘Creating a Better Future for Generation Next’, Grattan Institute, Giblin Lecture, Hobart, 30 August 2023. Return to text

- Coates, B 2022, ‘Levelling the playing field: it’s time for a national shared equity scheme’, Grattan Institute. Return to text

- Susan McKinnon Foundation 2023, ‘McKinnon Poll: Understanding attitudes towards housing in Australia’, September, p.90. Return to text

- Wood, D 2023a. Return to text

- The authors define a set of elite ‘rare’ surnames in 1900 as those surnames where 29 or fewer people held the name in Australia in 2014 in the voting roll, and where someone holding that name graduated from Melbourne or Sydney universities 1870-1899. Clark, G, Leigh, A, and Pottenger, M 2020, ‘Frontiers of mobility: Was Australia 1870–2017 a more socially mobile society than England?’, Explorations in Economic History, vol. 76. Return to text

- Ibid. Return to text

- Deutscher, N and Mazumder, B 2020, ‘Intergenerational mobility across Australia and the stability of regional estimates’, Labour Economics, vol. 66. Return to text

- Ibid. Return to text

- Cobb-Clark et al. 2022, ‘Intergenerational disadvantage: Learning about equal opportunity from social assistance receipt’, Labour Economics, vol. 79. Return to text

- Ibid, pp.16-17. Return to text

- Case, A and Deaton, A 2020, Deaths of despair and the future of capitalism, Princeton University Press, Princeton. Return to text

- TASCOSS 2022, ‘Wellbeing First: A budget proposal to ease the cost of living and invest in the long-term wellbeing of Tasmanians’, 2023-24 TASCOSS Budget Priorities Statement, p.12. Return to text

- Breunig, R and Taylor, M 2023, ‘Success in life is tied to parental education. That’s why we need to track intergenerational school performance’, The Conversation, 14 February. Return to text

- Hunter, J et al. 2023, ‘The Reading Guarantee: How to give every child the best chance of success.’ Grattan Institute. Return to text

- Ibid, p.10. Return to text

- Productivity Commission 2022a, ‘Review of the National School Reform Agreement’, Study Report, Canberra. Return to text

- The authors found a child whose family is amongst the 20% most disadvantaged has a probability of ending up in the top 20% of income earners of just 4.4% if they live in Charlotte, North Carolina, but 12.9% if they live in San Jose, in the heart of Silicon Valley. Chetty, R et al. 2014, ‘Where is the land of opportunity? The geography of intergenerational mobility in the United States’, The Quarterly Journal of Economics, vol. 129, no. 4, November, pp. 1553-1623. Return to text

- Chetty, R and Hendren, N 2018, ‘The impacts of neighborhoods on intergenerational mobility I: Childhood exposure effects’, The Quarterly Journal of Economics, vol. 133, no. 3, August, pp. 1107-1162, https://academic.oup.com/qje/article/133/3/1107/4850660?login=false. Return to text

- Deutscher, N 2020, ‘Place, peers, and the teenage years: Long-run neighborhood effects in Australia’, American Economic Journal: Applied Economics, vol. 12, no. 2, pp. 220-49. Return to text

- Chetty, R et al. 2022, ‘Social capital I: Measurement and associations with economic mobility’, Nature, vol. 608, pp. 108-121. Return to text

- Deaton, A, ‘What’s wrong with inequality?’, The IFS Deaton Review, panellist introduction, https://ifs.org.uk/inequality/themes/whats-wrong-with-inequality/, accessed 19 May 2024. Return to text

- Cerra et al. 2021, ‘Links between growth, inequality, and poverty: A survey’, IMF Working Papers, Working Paper No. 2021/068. Return to text

- Wilkinson, RG and Pickett, KE 2009, ‘Income inequality and social dysfunction’, Annual Review of Sociology, vol. 35, pp. 493–511. Return to text

- Protzer, ESM 2019, ‘Social Mobility Explains Populism, Not Inequality or Culture’, Center for International Development at Harvard University, Working Papers, no.118. Return to text

- Peterson Institute for International Economics 2019, ‘Combating inequality: Rethinking policies to reduce inequality in advanced economies’, conference papers, October 17 to 18, Washington DC. Return to text

- Cerra et al. 2021. Return to text

- Ibid. Return to text

- Weisstanner, D 2023, ‘Stagnating incomes and preferences for redistribution: The role of absolute and relative experiences’, European Journal of Political Research, vol. 62, pp. 551-570. Return to text